In a development that has raised eyebrows across Washington’s cybersecurity establishment, the acting head of the United States’ top cyber defence agency reportedly shared sensitive internal documents with a public version of ChatGPT, triggering automated security alerts and an internal review.

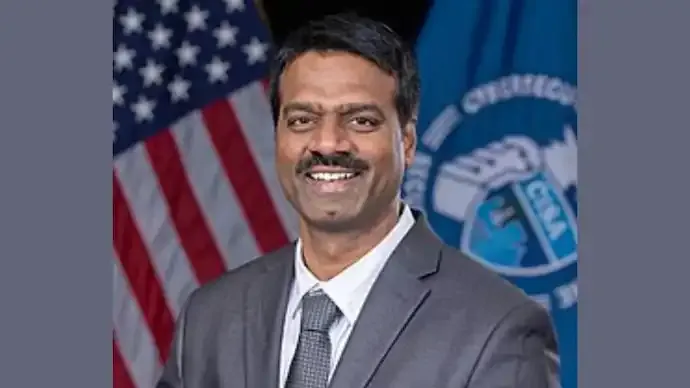

According to reports, Madhu Gottumukkala, the acting director of the Cybersecurity and Infrastructure Security Agency, uploaded contracting and cybersecurity-related materials to the artificial intelligence platform during the summer of 2025 for work-related purposes.

While the documents were not classified, they were labelled “For Official Use Only,” a designation that restricts public dissemination due to their sensitive nature. Officials familiar with the matter said the uploads activated internal safeguards designed to prevent government data from being exposed beyond authorised environments.

The incident prompted senior officials within the Department of Homeland Security to initiate an internal review in August to assess whether any federal systems or infrastructure were compromised as a result of the uploads. The findings of that review have not been disclosed publicly.

The episode has drawn particular attention because Gottumukkala leads the agency responsible for safeguarding federal networks against sophisticated cyber threats, including those attributed to state-backed actors linked to Russia and China. His role places him at the forefront of efforts to prevent breaches, espionage, and digital sabotage targeting critical government infrastructure.

Gottumukkala, who is of Indian origin, has an extensive academic and technical background in cybersecurity and information systems. He holds a doctoral degree in Information Systems, along with advanced qualifications in engineering management and computer science, and has spent years working at the intersection of technology policy and national security.

Reports indicate that Gottumukkala had been granted special permission to use ChatGPT, a tool that remains restricted for most employees within the department due to concerns about data retention and potential exposure. Information entered into public versions of generative AI platforms may be stored and used to improve underlying models, a factor that has made government agencies cautious about their use for official work.

CISA officials have sought to downplay the seriousness of the episode, stating that the use of ChatGPT was authorised, limited in scope, and time-bound. According to official statements, Gottumukkala last accessed the platform in mid-July 2025 under a temporary exception, and the agency continues to block access to such tools by default unless specific approval is granted.

The matter has drawn added scrutiny because of earlier controversies surrounding Gottumukkala’s leadership. Previous reports indicated internal tensions within the agency, including an episode in which multiple staff members were placed on leave following disputes over internal security procedures. Gottumukkala has publicly disputed claims made about him in that context, rejecting characterisations he said were inaccurate.

The incident underscores the growing challenges governments face as artificial intelligence tools become more widely available. Even as agencies explore how AI can improve efficiency and analysis, the episode highlights the risks of integrating such platforms into environments that handle sensitive information.

As policymakers and security officials continue to grapple with the balance between innovation and protection, the case has renewed calls for clearer guidelines on the use of generative AI within government systems — particularly by those tasked with defending them.

Published: Jan 29, 2026